illumex Omni was created to streamline your organization’s interaction with Large Language Models (LLMs), like Llama 2, or LLM services, like GPT-4. Integrated within your organizational chat workspace (like Slack), Omni enables real-time, reliable data querying with enhanced contextual understanding and applied governance. Using a domain-specific engine that combines LLMs and graphs, Omni interprets your prompts, matches them to your organization’s unique business data semantics, and auto-generates SQL queries. This ensures your questions are not only well understood but also directed to the appropriate data assets, reducing the risk of generative AI hallucinations and ambiguous outputs.

When you pose a data question, Omni engages immediately. Leveraging illumex’s Generative Semantic Fabric, it maps the question to the corresponding semantic and data objects. The question is then translated into SQL, and the mapping and the SQL are forwarded to your selected LLM as context. To maintain transparency, Omni interacts with you throughout this process, asking for clarification if your question seems ambiguous and suggesting relevant follow-up questions. Every step follows the augmented governance flows defined in the platform.
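The flow above can be pictured with a minimal Python sketch. It is an illustration only, not Omni’s actual implementation: the `SEMANTIC_CATALOG` dictionary, the `LlmContext` class, the `build_context` function, and the table and column names are all hypothetical, and simple substring matching stands in for the Generative Semantic Fabric.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical semantic catalog: business term -> data object and SQL expression.
# These entries are illustrative assumptions, not illumex's data model.
SEMANTIC_CATALOG = {
    "gross margin": {
        "table": "sales",
        "expression": "SUM(revenue - cost) / SUM(revenue)",
    },
    "daily active users": {
        "table": "events",
        "expression": "COUNT(DISTINCT user_id)",
    },
}


@dataclass
class LlmContext:
    """Everything that would be handed to the LLM alongside the question."""
    question: str
    matched_term: Optional[str]
    sql: Optional[str]
    needs_clarification: bool


def build_context(question: str) -> LlmContext:
    """Map a question to one catalog term and generate SQL for it."""
    lowered = question.lower()
    matches = [term for term in SEMANTIC_CATALOG if term in lowered]

    # Zero or several matching terms means the question is ambiguous,
    # so the sketch asks for clarification instead of guessing.
    if len(matches) != 1:
        return LlmContext(question, None, None, True)

    term = matches[0]
    entry = SEMANTIC_CATALOG[term]
    sql = f"SELECT {entry['expression']} AS value FROM {entry['table']}"
    return LlmContext(question, term, sql, False)


context = build_context("Show me the gross margin for the last quarter")
print(context.sql)
```

The point of the sketch is the ordering: the business term is resolved first, the SQL is generated from that resolved term, and only then is the combined context passed on, so the LLM never has to guess which data asset a term refers to.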

In essence, Omni acts as your business-context-aware interpretive layer between you and the LLM, ensuring that the data retrieved is both accurate and relevant to your organizational context. This makes illumex Omni an invaluable asset for organizations aiming to harness the power of generative AI without compromising their specific business language and data governance structures.

1. Getting Started

Omni is ready for use right out of the box—no special configurations or onboarding required. Once installed:

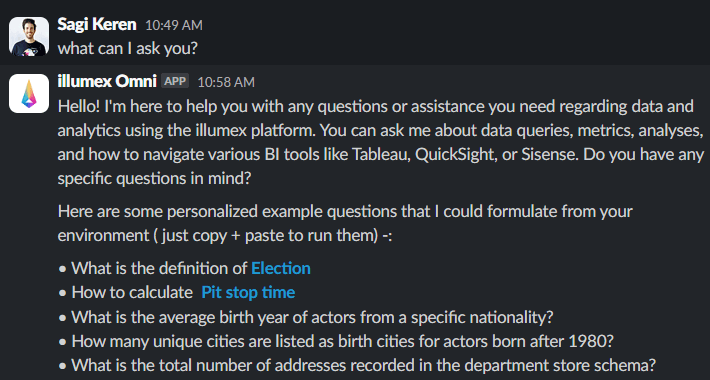

- Simply type your question directly – You can ask definition questions (e.g., “What is a DAU?”) or analytical questions (e.g., “Show me the sales gross margin for the last quarter”).

- Are you feeling overwhelmed? You can always type “what can I ask you?” in the app’s channel to get your own personalized examples of Omni’s capabilities.

🏆 Pro Tip: Bookmark the app in your Slack workspace for quick access by starring (☆) the conversation.

2. How to Use Omni

Omni offers a range of features to help streamline your analytic tasks. Here’s how you can use some of the key functions:

- Information Retrieval: provides precise, factual answers to specific business-related inquiries.

Typically involves direct questions requesting definitions or explanations of business terms, like: “what’s the description of …”, “how do we define …”, “what is …”

- Analytical Translation: interprets an analytical query and explains the reasoning behind its answer.

Typically involves requests where users ask a data question in non-technical language, like: “calculate the …”, “list all …”, “count how many …”, “what is our …”
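To make the difference between the two question types concrete, here is a small Python sketch that sorts a question by its opening phrase, using only the example phrases listed above. It is a simplified illustration under stated assumptions: the `classify_question` function and the label strings are hypothetical, and Omni’s real interpretation is not limited to prefix matching.

```python
# Opening phrases taken from the examples in this guide.
INFORMATION_PREFIXES = (
    "what's the description of",
    "how do we define",
    "what is",
)
ANALYTICAL_PREFIXES = (
    "calculate the",
    "list all",
    "count how many",
    "what is our",
)


def classify_question(question: str) -> str:
    """Return a hypothetical label for the kind of question asked."""
    # Normalize case and curly apostrophes so "What’s" matches "what's".
    normalized = question.strip().lower().replace("\u2019", "'")

    # Check analytical phrases first: "what is our" also starts with
    # "what is", so the more specific phrase has to win.
    if normalized.startswith(ANALYTICAL_PREFIXES):
        return "analytical_translation"
    if normalized.startswith(INFORMATION_PREFIXES):
        return "information_retrieval"
    return "unknown"


for example in ("What is a DAU?", "What is our churn rate?", "List all open deals"):
    print(example, "->", classify_question(example))
```

Note how close “what is …” and “what is our …” are: one asks for a definition, the other for a computed figure, which is why the wording of your question matters.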

Omni is also designed to offer maximum flexibility based on your preferences:

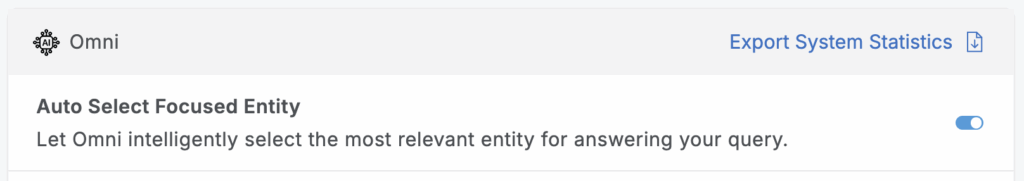

- Auto Select – Full Autonomous Response

- Omni automatically interprets your question and provides the most relevant answer based on its understanding, without any follow-up questions.

- This is perfect for quick queries where you want instant insights without having to be familiar with your data model or catalog.

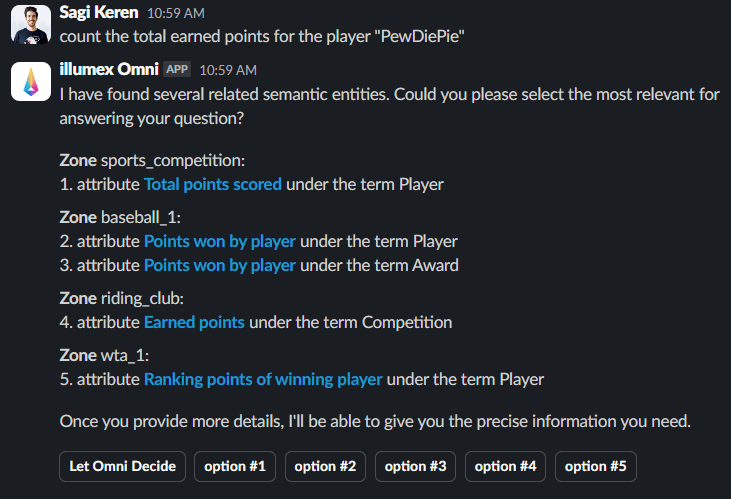

- User Select – User’s Interactive Selection

- If you prefer more control, Omni always presents multiple relevant options based on your question.

- You can then select the specific information that best matches your needs using interactive buttons.

- Not sure which option best fits your question? You can always click on “Let Omni Decide” for an intelligent autonomous response.

🏆 Pro Tip: Need a better understanding of one of the listed options? Use the highlighted links to visit the entity in illumex’s platform for further insights.
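The two response modes can be compared with a short Python sketch. Everything in it is an assumption made for illustration: the `Candidate` class, the relevance `score`, and the function names are hypothetical, and the only detail taken from this guide is the “Let Omni Decide” button label.

```python
from dataclasses import dataclass
from typing import List

LET_OMNI_DECIDE = "Let Omni Decide"


@dataclass
class Candidate:
    name: str
    score: float  # hypothetical relevance score, higher is better


def auto_select(candidates: List[Candidate]) -> Candidate:
    """Auto Select: pick the most relevant candidate with no follow-up."""
    return max(candidates, key=lambda c: c.score)


def user_select_options(candidates: List[Candidate]) -> List[str]:
    """User Select: list every option, best first, plus the fallback button."""
    ranked = sorted(candidates, key=lambda c: c.score, reverse=True)
    return [c.name for c in ranked] + [LET_OMNI_DECIDE]


def resolve_choice(candidates: List[Candidate], clicked: str) -> Candidate:
    """Turn a clicked button into a final choice."""
    if clicked == LET_OMNI_DECIDE:
        return auto_select(candidates)
    for candidate in candidates:
        if candidate.name == clicked:
            return candidate
    raise ValueError(f"Unknown option: {clicked}")


options = [
    Candidate("Gross Margin (Finance)", 0.91),
    Candidate("Gross Margin (Sales)", 0.84),
]
print(user_select_options(options))
print(resolve_choice(options, LET_OMNI_DECIDE).name)
```

In this sketch, clicking “Let Omni Decide” simply falls back to the same logic as Auto Select, which mirrors how the two modes are described above.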

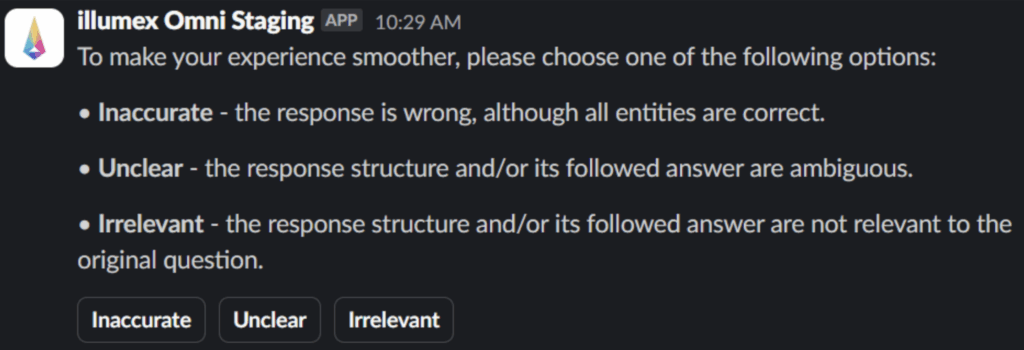

You can react to each response from Omni with 👍 (thumbs-up) or 👎 (thumbs-down) emojis. Use these to provide quick feedback:

- Thumbs Up (👍) helps reinforce helpful or accurate answers.

- Thumbs Down (👎) allows you to flag responses that are incorrect or unhelpful. You’ll also have the option to suggest a better reply, enabling Omni to improve and better align with your expectations.